Introduction

Imagine coding with an AI that already understands your entire codebase—no explaining project structure, no endless copying of code snippets. This isn't fantasy—it's what llm-context delivers.

This guide shows how to apply llm-context to your projects using Claude Desktop with both Project UI and MCP server. You'll see this approach transform bug fixing, feature development, and API integration through real examples.

Reimagining Collaborative Development

With proper context established, AI assistance transforms into something closer to true pair programming. You provide creative direction and domain expertise, while the AI handles implementation details—without the friction of constantly explaining your code.

From Frustration to Flow: A Real-World Example

Let's look at an actual development conversation that demonstrates how full context transforms AI-assisted development. This example is from one of the chat sessions we highlight later in this article:

@restlessronin: [paste error message about URL parameter encoding]

Me: I see the issue. The problem occurs when trying to build a URL with a list value in the query parameters. The current implementation in `OpenaiEx.Http.build_url/2` doesn't properly handle lists for the `include` parameter. [proceeds to provide detailed code solution]

@restlessronin: but doesn't the query key still have to be 'include[]'?

Me: [immediately pivots to consider API requirements and explores alternative approaches]

@restlessronin: would it be better to return a list rather than a map from prepare_query_parameters?

Me: [provides refined solution that better matches HTTP protocol expectations]

Without full context, this interaction would have required:

- Explaining the OpenAI API client structure

- Sharing multiple files showing parameter processing

- Clarifying HTTP request flows

- Multiple exchanges about data types

- Several iterations to match project coding style

Instead, we jumped directly to problem-solving. I already understood:

- The HTTP client architecture

- Parameter encoding mechanisms

- The specific list parameter issue

- The project's functional programming approach

This is full context magic—eliminating tedious groundwork so you focus solely on solving problems.

Later in this article, we'll examine this and other real conversations in more detail to show how this approach transforms AI-assisted development.

Using llm-context with Claude

This guide assumes you've already installed and initialized llm-context in your project. For installation instructions, please refer to the project README.

Adding Content to Claude's Project UI

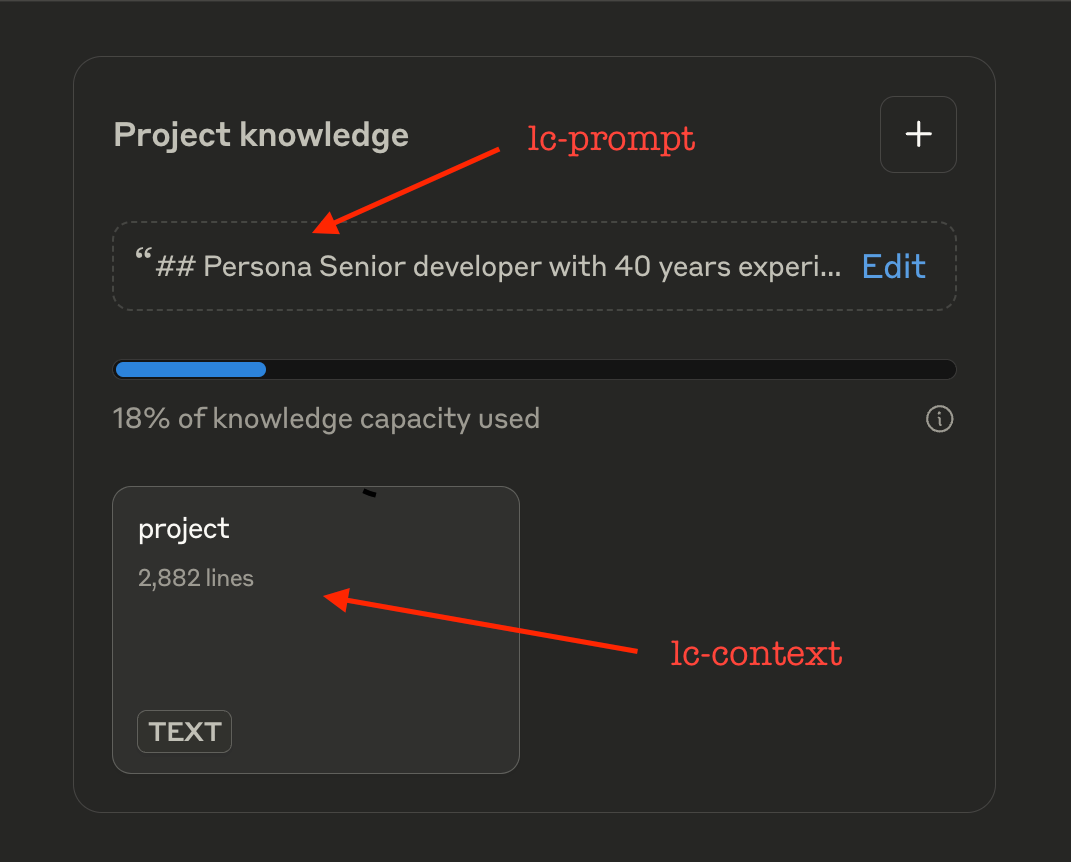

Open Claude Desktop, navigate to your project and locate the Project Knowledge panel. You'll need to paste the generated content into two sections:

- Run `lc-prompt` and paste the clipboard contents into the prompt section at the top

- Run `lc-context` and paste the clipboard contents into the context section below

This setup provides me with comprehensive knowledge of your codebase before our conversation begins.

Remember to regenerate the context at the beginning of each new conversation to ensure I have the latest version of your code. The prompt typically doesn't change much between conversations.

Enhancing with MCP Server for Dynamic Code Access

While the project UI provides initial context, the preferred workflow combines this with the Model Context Protocol (MCP) for dynamic code access during our conversation.

This hybrid approach gives you the best of both worlds:

- Comprehensive project overview: The Project UI stores your project in my memory (persisted KV cache), providing efficient access to your codebase

- Adaptive context during development: MCP integration allows me to dynamically request specific files and see your latest changes as our conversation evolves, without requiring manual file sharing

This workflow is especially powerful for complex projects where you might not know in advance which specific files will be relevant to the discussion.

MCP integration works with Claude Desktop but is not available in the Claude web interface. For details on setting up MCP, refer to the project README.

The Magic of Full Context

Now that you understand the setup, let's see how this works in practice. The following real-world examples demonstrate how this contextual awareness transforms our interactions from fragmented explanations into fluid collaboration.

Real-World Examples

These examples are pulled from recent development work - not specially selected showcases, but rather everyday problem-solving conversations that demonstrate the natural efficiency of context-aware assistance. Each link below leads to the complete chat transcript of the actual development conversation:

Bug Fix: Rule file overwriting issue

- Problem: Rule files being overwritten when creating new profiles

- Solution: Added conditional check in `_setup_default_rules()` to prevent unnecessary overwrites

- Process: Identified the issue in `ProjectSetup.initialize()`, provided ready-to-implement code, and generated a commit message unprompted

- View commit
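To make the shape of this fix concrete, here is a minimal Python sketch of the kind of guard involved. It is not the actual llm-context code: the function name `write_default_rules`, its arguments, and the file names in the usage note are assumptions chosen for illustration. The idea is simply to skip any rule file that already exists, so user edits survive when setup runs again.

```python
from pathlib import Path


def write_default_rules(rules_dir, defaults):
    """Write each default rule file unless it already exists.

    `defaults` maps a file name to its default content (hypothetical layout).
    Returns the list of file names that were actually written.
    """
    rules_dir = Path(rules_dir)
    rules_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for name, content in defaults.items():
        target = rules_dir / name
        if target.exists():
            # Conditional check: never clobber a file the user may have edited.
            continue
        target.write_text(content)
        written.append(name)
    return written
```

Calling `write_default_rules` a second time with the same defaults writes nothing, and a customized file keeps its contents. Only names that are missing from the directory get created.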

Feature Development: Adding regex search functionality

- Problem: Needed a flexible way to find patterns across web content beyond simple text matches

- Solution: Evolved from basic implementations to an elegant functional programming approach using `reduce` and lambda functions for merging overlapping context regions

- Process: The conversation explored multiple approaches (line-based vs. character-based) with progressive refinement toward a solution that matched @restlessronin's functional programming style preferences, while I also provided an "actual user" perspective on the feature's utility

- View commit
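As a rough illustration of what "merging overlapping context regions" with `reduce` and a lambda can look like, here is a small character-based sketch in Python. It is an assumption-laden example, not the code from the commit: the function name `context_regions` and the `context` parameter are hypothetical.

```python
import re
from functools import reduce


def context_regions(text, pattern, context=20):
    """Return merged (start, end) character ranges around each regex match."""
    # One region per match, padded by `context` characters and clamped to the text.
    spans = [
        (max(0, m.start() - context), min(len(text), m.end() + context))
        for m in re.finditer(pattern, text)
    ]
    # Fold the spans left to right: extend the last region when the next one
    # overlaps or touches it, otherwise start a new region.
    merge = lambda acc, span: (
        acc[:-1] + [(acc[-1][0], max(acc[-1][1], span[1]))]
        if acc and span[0] <= acc[-1][1]
        else acc + [span]
    )
    return reduce(merge, spans, [])
```

Because `re.finditer` yields matches in order of position, the spans arrive sorted by start and a single left-to-right fold is enough. Two nearby matches collapse into one region, while a distant match stays separate.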

API Integration: HTTP parameter handling

- Problem: The OpenAI API client failed when sending list parameters in query strings, showing an error with URI encoding

- Solution: Enhanced the URL builder to return a list of tuples rather than a map, properly handling multiple parameters with the same key name in a way that's compliant with HTTP protocol

- Process: From just an error message, I immediately understood the Elixir OpenAI client architecture, discussed HTTP protocol compliance for parameter encoding, and recognized implications for the broader Thread Runs API

- View commit
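The actual fix lives in the Elixir client, but the underlying idea carries over to any language. The sketch below is a Python analogue, offered under stated assumptions: it borrows the names `prepare_query_parameters` and `build_url` from the conversation, while the bodies, the `key[]` convention for list values, and the example URL are illustrative rather than a port of the real implementation. A map cannot hold the same key twice, so the parameters are flattened into a list of `(key, value)` pairs before encoding.

```python
from urllib.parse import urlencode


def prepare_query_parameters(params):
    """Flatten a mapping into (key, value) pairs.

    List values repeat the key as 'key[]', one pair per item, which a
    dict could not represent because its keys are unique.
    """
    pairs = []
    for key, value in params.items():
        if isinstance(value, (list, tuple)):
            pairs.extend((f"{key}[]", item) for item in value)
        else:
            pairs.append((key, value))
    return pairs


def build_url(base_url, params=None):
    """Append the percent-encoded query string to base_url, if there is one."""
    pairs = prepare_query_parameters(params or {})
    if not pairs:
        return base_url
    return f"{base_url}?{urlencode(pairs)}"
```

For example, `build_url("https://api.example.com/runs", {"include": ["a", "b"], "limit": 5})` returns `https://api.example.com/runs?include%5B%5D=a&include%5B%5D=b&limit=5`, with the brackets in `include[]` percent-encoded and the key repeated once per list item.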

In these examples, you can see how full context eliminates lengthy explanations and allows us to focus immediately on problem-solving. This efficiency is the core benefit of using llm-context in your development workflow.

Higher Quality through Deeper Understanding

Beyond just saving time, full context integration elevates the quality of AI collaboration through several key mechanisms:

Better architectural alignment: Solutions naturally fit within your existing code patterns because I understand your architectural choices. In the regex search example, this meant proposing a functional approach with `reduce` and lambda expressions that aligned perfectly with the codebase's style, rather than a more imperative implementation.

More comprehensive problem solving: With full project awareness, I can address not just the immediate issue but anticipate related concerns. When fixing the API parameter encoding issue, this allowed me to recognize potential impacts on the Thread Runs API that would have been invisible without wider context.

Progressive refinement: Conversations can evolve from basic solutions to sophisticated implementations in a single session. The regex search functionality demonstrates this perfectly - we moved from a simple line-based approach to an elegant functional pattern in one continuous conversation, each suggestion building on shared understanding of both the problem and the codebase.

These quality improvements compound over time, transforming AI from a limited assistant into a true development partner that understands your code as thoroughly as a human colleague.

This transformation from frustration to flow isn't just subjective; it's measurable. In our work, providing complete project context to AI collaborators has produced 2-2.5x productivity gains.

Credits

Article written by @claude-3.7-sonnet with direction from @restlessronin. This article itself exemplifies our collaborative approach. It was not written in one shot - our iterative process resulted in over 30 commits. llm-context was used to facilitate the collaboration.